High-throughput experimentation (HTE) enables efficient production of AI/ML datasets and benefits from AI-driven experiment design. This case study shows how leading pharmaceutical firms are using Katalyst D2D software to streamline workflows, generate high-quality datasets, and apply integrated machine learning. The goal is to reduce experimental workload while deepening data-driven insights for discovery and process chemistry.

The last decade has seen renewed interest in automating and parallelising chemistry. HTE accelerates reaction optimisation by running miniaturised reactions in parallel, so each experiment consumes less material. It delivers optimisation data faster than traditional sequential experimentation and is well suited to producing the comprehensive datasets that machine learning (ML) requires. Pharmaceutical R&D organisations have invested both in hardware that automates reaction execution and in software that streamlines these data-rich workflows.

Fragmented software is particularly problematic in HTE because of the large volumes of data each workflow generates. HTE teams at leading pharmaceutical organisations have adopted Katalyst D2D to integrate their hardware and software in a single interface. The software has helped them streamline parallel chemistry workflows, automate the collection and correlation of results, and keep data management consistent. As a result, users have been able to produce data for data-hungry AI/ML applications and begin exploring how to use it.

We explore how teams at three top pharmaceutical organisations are preparing to leverage HTE data for AI/ML while achieving significant efficiency gains.

Satisfying AI/ML data requirements

ML models that predict reaction outcomes offer a powerful way to accelerate drug discovery. Building robust models that predict successful synthetic experiments requires:

- High quality data

- Consistent acquisition and processing of data

- Negative and positive results

- Reaction conditions, yields, and side-product formation
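To make these requirements concrete, here is a minimal sketch of how a single HTE well might be captured as a validated record. The class and field names (`ReactionRecord`, `yield_pct`, `side_products`) are hypothetical illustrations, not the Katalyst D2D data model. The point is that conditions, yields, side products, and failed reactions all live in one consistently checked structure.

```python
from dataclasses import dataclass, field, asdict

@dataclass
class ReactionRecord:
    """One HTE well (hypothetical schema for illustration only)."""
    plate_id: str
    well: str                  # e.g. "A1"
    conditions: dict           # e.g. {"solvent": "DMF", "base": "DIPEA", "temp_C": 25}
    yield_pct: float           # 0.0 is a valid, informative negative result
    side_products: dict = field(default_factory=dict)  # name -> amount, one agreed unit

    def __post_init__(self):
        # Validate at capture time so every record is consistent before analysis.
        if not 0.0 <= self.yield_pct <= 100.0:
            raise ValueError(f"yield_pct out of range: {self.yield_pct}")
        if not self.conditions:
            raise ValueError("conditions must not be empty")
        if any(amount < 0 for amount in self.side_products.values()):
            raise ValueError("side-product amounts must be non-negative")

    def is_negative_result(self, threshold: float = 5.0) -> bool:
        # Arbitrary cutoff for illustration; failed reactions are kept, not discarded.
        return self.yield_pct < threshold

ok = ReactionRecord("P001", "A1", {"solvent": "DMF", "base": "DIPEA"}, 72.5, {"dimer": 3.1})
failed = ReactionRecord("P001", "B3", {"solvent": "THF", "base": "NMM"}, 0.0)
print(ok.is_negative_result(), failed.is_negative_result())  # False True
print(asdict(failed))  # plain dict, ready to serialise as JSON
```

Rejecting malformed records at capture time, rather than during analysis, is what spares downstream users the data-cleaning step.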

Generating consistent data

Four years ago, Company #1 took the first step of unifying its HTE data in a single solution, Katalyst D2D, aiming to reach answers more quickly and to use AI/ML to help design experiments. Having expanded HTE across discovery and process chemistry, the company is now starting to export data from the software as JSON files. It loads these into data visualisation tools such as Spotfire to understand trends and investigate data science applications.
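Moving from a JSON export to a visualisation tool usually means flattening nested records into one row per reaction. The sketch below assumes a simple export layout: an experiment containing a list of wells, each with `conditions` and `results`. That layout is hypothetical, since the case study does not describe Katalyst's actual JSON schema. The output is a flat CSV table of the kind tools such as Spotfire can load.

```python
import csv
import io
import json

def flatten_export(doc: dict) -> list:
    """Turn one nested experiment export (hypothetical layout) into flat rows, one per well."""
    rows = []
    for well in doc.get("wells", []):
        row = {"experiment": doc.get("experiment"), "plate": doc.get("plate"), "well": well.get("well")}
        # Prefix nested keys so condition and result names cannot collide.
        for key, value in well.get("conditions", {}).items():
            row[f"cond_{key}"] = value
        for key, value in well.get("results", {}).items():
            row[f"res_{key}"] = value
        rows.append(row)
    return rows

def rows_to_csv(rows: list) -> str:
    # Union of all keys, in first-seen order, so sparse wells still line up in one table.
    fieldnames = []
    for row in rows:
        for key in row:
            if key not in fieldnames:
                fieldnames.append(key)
    buf = io.StringIO()
    writer = csv.DictWriter(buf, fieldnames=fieldnames, restval="")
    writer.writeheader()
    writer.writerows(rows)
    return buf.getvalue()

export = json.loads("""
{"experiment": "EXP-001", "plate": "P1",
 "wells": [
   {"well": "A1", "conditions": {"solvent": "DMF", "base": "DIPEA"}, "results": {"yield_pct": 81.0}},
   {"well": "A2", "conditions": {"solvent": "MeCN", "base": "DIPEA"}, "results": {"yield_pct": 0.0}}
 ]}
""")
print(rows_to_csv(flatten_export(export)))
```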

AI/ML dataset production

Company #2 began mining data from Katalyst D2D for AI/ML applications within a year of deployment and has pipelined it into a data lake for AI/ML use. The company almost doubled its HTE user base in 24 months (from 77 to more than 130 users) and averaged 560 HT experiments a year. The consistent, complete, and contextual data acquired and managed in Katalyst has enabled Company #2 to explore using HTE data in AI/ML applications.

“Katalyst ensures that all the criteria for building robust predictive AI/ML models are met for our HTE screens. Katalyst’s ability to help scientists easily design large arrays of experiments and record data in a consistent fashion holds great promise for building efficient machine learning models.” - Head of HTE, Company #2

ML model building

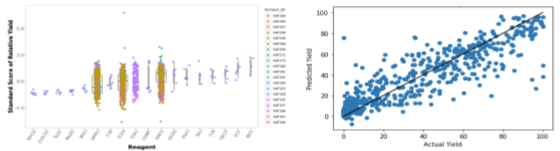

Company #3 generated and analysed approximately 3000 data points from an HTE amide coupling study to build a proof-of-concept machine learning model for predicting reaction yield (Figure 1).

Figure 1. ~3000 data points generated for amide coupling (left) were used to create a preliminary machine learning model for predicting reaction yield (right scatter plot)

“The robust database and data architecture [of Katalyst D2D] allows you to go from an experiment to a data science model very quickly, without the need to do very tedious data cleaning tasks. From a data science perspective, the data goes directly from running the experiment to being written to the Katalyst database. The data can be pulled from there into Python to then do your data science workflows, almost seamlessly.” - Associate Scientific Director, Company #3
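As a rough illustration of the kind of Python workflow this quote describes, the sketch below trains a k-nearest-neighbour regressor that predicts yield from categorical reaction conditions. It then reports the error on held-out reactions, producing the (predicted, observed) pairs a parity plot like Figure 1 would show. This is not Company #3's model, which the case study does not describe. The reagent names and the additive yield rule are synthetic placeholders chosen only so the code runs, and the code uses the standard library alone to stay self-contained.

```python
import itertools
import random

def hamming(a: tuple, b: tuple) -> int:
    # Number of reaction components that differ between two sets of conditions.
    return sum(x != y for x, y in zip(a, b))

def knn_predict(train, query, k=3):
    """Average yield of the k most similar training reactions."""
    neighbours = sorted(train, key=lambda item: hamming(item[0], query))[:k]
    return sum(y for _, y in neighbours) / len(neighbours)

def mean_absolute_error(pairs):
    return sum(abs(pred - obs) for pred, obs in pairs) / len(pairs)

# Synthetic toy data (NOT the study's data): yield from a made-up additive rule.
solvents = {"DMF": 20, "MeCN": 10, "THF": 0}
reagents = {"HATU": 40, "T3P": 30, "EDC": 15}
bases = {"DIPEA": 20, "NMM": 10, "TEA": 5}
data = [((s, r, b), solvents[s] + reagents[r] + bases[b])
        for s, r, b in itertools.product(solvents, reagents, bases)]

rng = random.Random(0)
rng.shuffle(data)
train, test = data[:21], data[21:]

# (predicted, observed) pairs, i.e. the points of a parity plot.
pairs = [(knn_predict(train, x), y) for x, y in test]
print(f"MAE on held-out reactions: {mean_absolute_error(pairs):.1f} % yield")
```

Real models typically use richer molecular descriptors and more data. The shape of the workflow stays the same: load clean records, split, fit, and compare predictions with observations.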

Leveraging AI in experiment design

More recently, Company #3 collaborated with the Doyle lab at UCLA to create a Bayesian optimisation algorithm that uses known reaction information to guide the design of HT experiments. The resulting Experiment Design Bayesian Optimiser (EDBO+) algorithm was integrated into Katalyst.
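The case study does not describe EDBO+'s internals beyond its being Bayesian optimisation. As a rough illustration of the general technique only, the sketch below runs batch Bayesian optimisation over a small categorical design space. It uses:

- a Gaussian-process surrogate with an RBF kernel on one-hot encoded conditions
- expected improvement as the acquisition function
- the "kriging believer" heuristic to fill each batch

The factors, levels, and `run_reaction` scoring function are hypothetical stand-ins for a real plate. This is not EDBO+'s implementation. Unlike the study's objective, which also considered impurities, it optimises a single score. The four-rounds-of-six budget is chosen only to match the campaign size this case study reports.

```python
import itertools
import math
import random

# Hypothetical factors and a toy objective standing in for a real measurement.
LEVELS = {"solvent": ["DMF", "MeCN", "THF", "DCM"],
          "reagent": ["HATU", "T3P", "EDC", "COMU"],
          "base": ["DIPEA", "NMM", "TEA"]}
TOY_SCORE = {"DMF": 20, "MeCN": 12, "THF": 5, "DCM": 0, "HATU": 40, "T3P": 35,
             "EDC": 15, "COMU": 30, "DIPEA": 20, "NMM": 12, "TEA": 8}

def run_reaction(cond):
    return sum(TOY_SCORE[level] for level in cond)

def encode(cond):
    # One-hot encode each categorical factor.
    return tuple(1.0 if chosen == opt else 0.0
                 for chosen, opts in zip(cond, LEVELS.values()) for opt in opts)

def rbf(a, b, length=1.0):
    d2 = sum((p - q) ** 2 for p, q in zip(a, b))
    return math.exp(-0.5 * d2 / length ** 2)

def cholesky(K):
    n = len(K)
    L = [[0.0] * n for _ in range(n)]
    for i in range(n):
        for j in range(i + 1):
            s = sum(L[i][k] * L[j][k] for k in range(j))
            L[i][j] = math.sqrt(K[i][i] - s) if i == j else (K[i][j] - s) / L[j][j]
    return L

def solve_lower(L, b):  # solve L x = b by forward substitution
    x = []
    for i in range(len(b)):
        x.append((b[i] - sum(L[i][k] * x[k] for k in range(i))) / L[i][i])
    return x

def solve_upper_t(L, b):  # solve L^T x = b by back substitution
    n = len(b)
    x = [0.0] * n
    for i in reversed(range(n)):
        x[i] = (b[i] - sum(L[k][i] * x[k] for k in range(i + 1, n))) / L[i][i]
    return x

def gp_posterior(X, y, queries, noise=1e-4):
    """GP mean and std at each query; outcomes are standardised internally."""
    mean = sum(y) / len(y)
    std = math.sqrt(sum((v - mean) ** 2 for v in y) / len(y)) or 1.0
    yc = [(v - mean) / std for v in y]
    K = [[rbf(a, b) + (noise if i == j else 0.0) for j, b in enumerate(X)]
         for i, a in enumerate(X)]
    L = cholesky(K)
    alpha = solve_upper_t(L, solve_lower(L, yc))
    out = []
    for q in queries:
        k = [rbf(q, x) for x in X]
        v = solve_lower(L, k)
        var = max(1.0 - sum(t * t for t in v), 1e-12)
        out.append((mean + std * sum(a * b for a, b in zip(k, alpha)), std * math.sqrt(var)))
    return out

def expected_improvement(mu, sigma, best, xi=0.01):
    z = (mu - best - xi) / sigma
    cdf = 0.5 * (1.0 + math.erf(z / math.sqrt(2.0)))
    pdf = math.exp(-0.5 * z * z) / math.sqrt(2.0 * math.pi)
    return (mu - best - xi) * cdf + sigma * pdf

def propose_batch(X, y, pool, batch_size):
    """Kriging believer: take the max-EI point, assume its outcome equals the GP
    mean, refit, and repeat until the batch is full."""
    X, y, pool, batch = list(X), list(y), list(pool), []
    for _ in range(batch_size):
        post = gp_posterior(X, y, pool)
        scores = [expected_improvement(m, s, max(y)) for m, s in post]
        i = max(range(len(pool)), key=scores.__getitem__)
        batch.append(pool[i])
        X.append(pool[i])
        y.append(post[i][0])  # fantasy observation, not a real result
        pool.pop(i)
    return batch

def optimise(rounds=4, batch_size=6, seed=0):
    space = list(itertools.product(*LEVELS.values()))
    batch = random.Random(seed).sample(space, batch_size)  # round 1: random screen
    tried, y = [], []
    for r in range(rounds):
        if r > 0:  # later rounds: let the surrogate model choose
            untried = {encode(c): c for c in space if c not in tried}
            picks = propose_batch([encode(c) for c in tried], y, list(untried), batch_size)
            batch = [untried[e] for e in picks]
        for cond in batch:
            tried.append(cond)
            y.append(run_reaction(cond))  # stand-in for running the plate
    return tried, y

tried, yields = optimise()
best = max(range(len(yields)), key=yields.__getitem__)
print(len(tried), "reactions run; best:", tried[best], yields[best])
```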

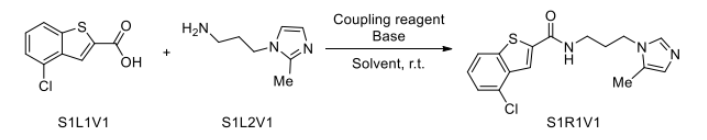

The EDBO+ algorithm was tested using a common amide coupling reaction (Figure 2). The objective was to identify reaction parameters that maximise yield and minimise impurities.

Figure 2. Amide coupling reaction used to test AI-enabled reaction design in Katalyst D2D software
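Because this objective combines two criteria, there is no single "best" number to rank outcomes by. One common way to compare results under two competing goals is Pareto dominance. The sketch below keeps only results that no other result beats on both yield and impurity. It is a generic illustration of multi-objective comparison, not a description of how EDBO+ works internally, and the condition labels and values are invented.

```python
def dominates(a, b):
    """True if outcome a is at least as good as b on both objectives and strictly
    better on one. Outcomes are (yield_pct, impurity_pct): maximise yield, minimise impurity."""
    return (a[0] >= b[0] and a[1] <= b[1]) and (a[0] > b[0] or a[1] < b[1])

def pareto_front(results):
    """Return the conditions whose outcomes no other result dominates."""
    return [name for name, out in results.items()
            if not any(dominates(other, out) for other in results.values())]

results = {  # illustrative numbers only, not data from the study
    "HATU/DIPEA/DMF": (88.0, 4.0),
    "T3P/NMM/EtOAc": (82.0, 1.5),
    "EDC/DIPEA/DCM": (70.0, 3.0),
    "COMU/TEA/MeCN": (85.0, 5.0),
}
print(pareto_front(results))  # ['HATU/DIPEA/DMF', 'T3P/NMM/EtOAc']
```

Here the first two conditions represent different trade-offs, so both survive. The other two are each beaten on both criteria.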

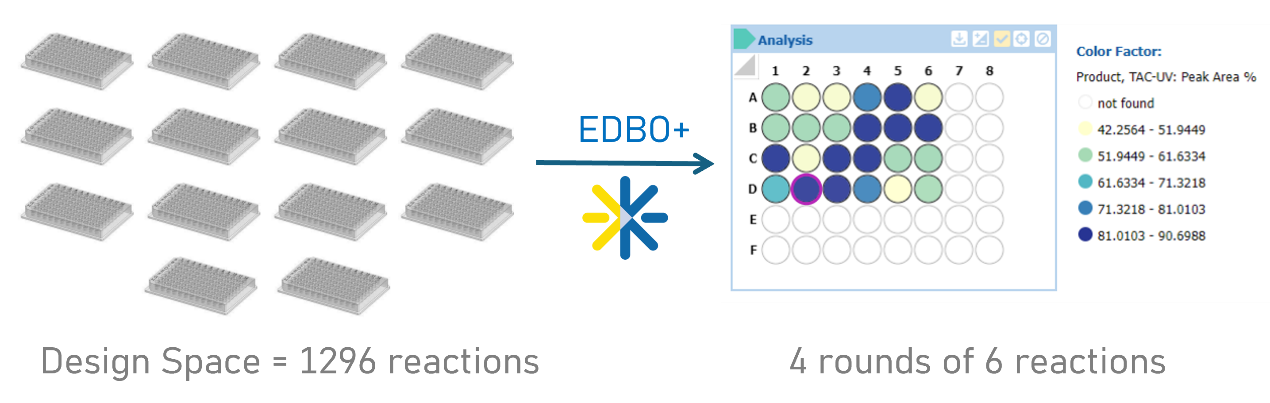

Twenty reaction variables (different solvents, coupling reagents, and bases, plus a range of reaction concentrations and reagent equivalents) define a total design space of 1296 reactions. Testing all of them experimentally would require 14 rounds of experiments in 96-well plates, which would be time-consuming and consume a significant amount of material.
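The plate count follows from simple arithmetic. A full factorial design enumerates every combination of factor levels, and 1296 / 96 = 13.5, which rounds up to 14 plates. The sketch below enumerates such a design and splits it into 96-well plates. The level counts (6 × 6 × 4 × 3 × 3) and placeholder level names are assumptions picked only to reproduce the 1296 total. The case study does not state the actual breakdown.

```python
import itertools
import math

# Hypothetical level counts: chosen only so that the full factorial equals 1296.
factors = {
    "solvent": [f"solvent_{i}" for i in range(6)],
    "coupling_reagent": [f"reagent_{i}" for i in range(6)],
    "base": [f"base_{i}" for i in range(4)],
    "concentration_M": [0.05, 0.1, 0.2],
    "equivalents": [1.0, 1.2, 1.5],
}

design = list(itertools.product(*factors.values()))  # every combination exactly once
WELLS_PER_PLATE = 96
plates = [design[i:i + WELLS_PER_PLATE] for i in range(0, len(design), WELLS_PER_PLATE)]

print(len(design))                               # 1296
print(math.ceil(len(design) / WELLS_PER_PLATE))  # 14
print(len(plates), len(plates[-1]))              # 14 48
```

The last plate is only half full (48 wells), which is why the 13.5-plate requirement becomes 14 rounds in practice.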

Results from AI-enabled experiment design

Figure 3. The machine learning algorithm (EDBO+) integrated in Katalyst enabled optimal reaction conditions to be identified in 24 reactions instead of the full factorial of 1296 reactions

Using the EDBO+ algorithm, the group identified optimal conditions for the amidation in just 24 reactions (four rounds of six), roughly 2% of the 1296-reaction full factorial design space. These savings in time, effort, and consumables are the kind of benefits researchers hope to realise from AI/ML technologies.
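The 2% figure is the ratio of reactions actually run to the size of the full factorial:

```latex
\begin{aligned}
N_{\text{run}} &= 4 \text{ rounds} \times 6 \text{ reactions} = 24, \\
\frac{N_{\text{run}}}{N_{\text{full}}} &= \frac{24}{1296} = \frac{1}{54} \approx 0.0185 \approx 2\%.
\end{aligned}
```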

The future of data-driven R&D

HTE lends itself to consistent, efficient dataset production for AI/ML. In turn, integrating AI/ML into HT workflows delivers measurable results: teams are streamlining workflows and improving efficiency to accelerate time-to-market. As AI-driven approaches continue to evolve, the synergy between automation and data science should open up new applications. This will enable scientists to push the boundaries of discovery and make smarter, data-informed decisions.