Carlo Ruiz, EMEA director of AI Data Center Solutions at Nvidia, gives his thoughts on the rising popularity of conversational AI.

There are lots of reasons why conversational AI is the talk of the town.

It can turn speech into text that’s searchable. It morphs text into speech you can listen to hands-free while working or driving.

As it gets smarter, it’s understanding more of what it hears and reads, making it even more useful. That’s why the word is spreading fast.

Conversational AI is perhaps best known as the language of Siri and Alexa, but high-profile virtual assistants share the stage with a growing chorus of agents.

In 2020, the shift toward working from home, telemedicine and remote learning created a surge in demand for custom, language-based AI services that keep people productive and connected, ranging from customer support to real-time transcription and summarisation of video calls.

Businesses are using the technology to manage contracts. Doctors use it to take notes during patient exams. And a laundry list of companies are tapping it to improve customer support.

Conversational AI is central to the future of many industries, as applications gain the ability to understand and communicate with nuance and contextual awareness.

But until now, developers of language-processing neural networks that power real-time speech applications have faced an unfortunate trade-off: Be quick and you sacrifice the quality of the response; craft an intelligent response and you’re too slow.

That’s because human conversation is incredibly complex. Every statement builds on shared context and previous interactions. From inside jokes to cultural references and wordplay, humans speak in highly nuanced ways without skipping a beat. Each response follows the last almost instantly. Friends can anticipate what the other is about to say before a word is spoken.

Introducing Nvidia Jarvis

Nvidia Jarvis is a GPU-accelerated application framework that allows companies to use video and speech data to build state-of-the-art conversational AI services customised for their own industry, products, and customers.

Jarvis provides a complete, GPU-accelerated software stack and tools that make it easy for developers to create, deploy and run end-to-end, real-time conversational AI applications that can understand terminology unique to each company and its customers.

To offer an interactive, personalised experience, companies need to train their language-based applications on data that is specific to their own product offerings and customer requirements. However, building a service from scratch requires deep AI expertise, large amounts of data and compute resources to train the models, and software to regularly update models with new data.

Jarvis addresses these challenges by offering an end-to-end deep learning pipeline for conversational AI. It includes state-of-the-art deep learning models, such as Nvidia's Megatron BERT for natural language understanding. Enterprises can further fine-tune these models on their data using Nvidia NeMo, optimise for inference using TensorRT, and deploy in the cloud and at the edge using Helm charts available on NGC, Nvidia’s catalogue of GPU-optimised software.

Early Adopters — Voca, Kensho

Companies worldwide are using Nvidia's conversational AI platform to improve their services.

Among the first companies to offer their customers Jarvis-based conversational AI products and services are Voca, with AI agents for call centre support; Kensho, with automatic speech transcription for finance and business; and Square, with its virtual assistant for appointment scheduling.

Voca’s AI virtual agents, which use Nvidia's platform for faster, more interactive, human-like engagements, are used by Toshiba, AT&T and other world-leading companies. Voca uses AI to understand the full intent of a customer’s spoken conversation. This makes it possible for the agents to automatically identify different tones and vocal cues to discern between what a customer says and what a customer means. Additionally, using scalability features built into Nvidia's AI platform, Voca can dramatically reduce customer wait times.

'Low latency is critical in call centres and with Nvidia GPUs our agents are able to listen, understand and respond in under a second with the highest levels of accuracy,' said Alan Bekker, co-founder and CTO of Voca. 'Now our virtual agents are able to successfully handle 70-80 per cent of all calls — ranging from general customer service requests to payment transactions and technical support.'

Kensho, S&P Global's innovation hub in Cambridge, Massachusetts, deploys scalable machine learning and analytics systems. It has used Nvidia's conversational AI to develop Scribe, a speech recognition solution for finance and business. With Nvidia, Scribe outperforms other commercial solutions in accuracy by a margin of up to 20 per cent on earnings calls and similar financial audio.

'We’re working closely with Nvidia on ways to push end-to-end automatic speech recognition with deep learning even further,' said Georg Kucsko, head of AI research at Kensho. 'By training new models with Nvidia, we’re able to offer higher transcription accuracy for financial jargon compared to traditional approaches that do not use AI, offering our customers timely information in minutes versus days.'
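The article does not say how Scribe's accuracy was measured, or whether the 20 per cent margin is relative or absolute. Speech recognition accuracy is commonly reported as word error rate (WER): the minimum number of word substitutions, deletions and insertions needed to turn a system's transcript into a reference transcript, divided by the number of reference words, so lower is better. The following is a minimal sketch, assuming plain whitespace tokenisation and no case or punctuation normalisation; `word_error_rate` is a hypothetical helper for illustration, not part of Kensho's or Nvidia's software.

```python
def word_error_rate(reference: str, hypothesis: str) -> float:
    """WER = (substitutions + deletions + insertions) / number of reference words.

    Uses a word-level Levenshtein edit distance computed by dynamic programming.
    Assumes whitespace tokenisation with no text normalisation (hypothetical helper).
    """
    ref = reference.split()
    hyp = hypothesis.split()
    if not ref:
        raise ValueError("reference transcript must contain at least one word")

    # prev[j]: edit distance between the reference words processed so far
    # and the first j hypothesis words.
    prev = list(range(len(hyp) + 1))
    for i, ref_word in enumerate(ref, start=1):
        curr = [i] + [0] * len(hyp)
        for j, hyp_word in enumerate(hyp, start=1):
            cost = 0 if ref_word == hyp_word else 1
            curr[j] = min(
                prev[j] + 1,         # deletion: reference word missing from hypothesis
                curr[j - 1] + 1,     # insertion: extra word in hypothesis
                prev[j - 1] + cost,  # match or substitution
            )
        prev = curr
    return prev[-1] / len(ref)


if __name__ == "__main__":
    # Illustrative strings only: financial jargon misheard as everyday words.
    reference = "EBITDA margin expanded two hundred basis points"
    hypothesis = "a bit a margin expanded two hundred basis points"
    print(f"WER = {word_error_rate(reference, hypothesis):.3f}")
```

Because WER is normalised by reference length, a few misrecognised domain terms in a short utterance can move the score sharply, which is one reason vocabulary-specific training matters for financial audio.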

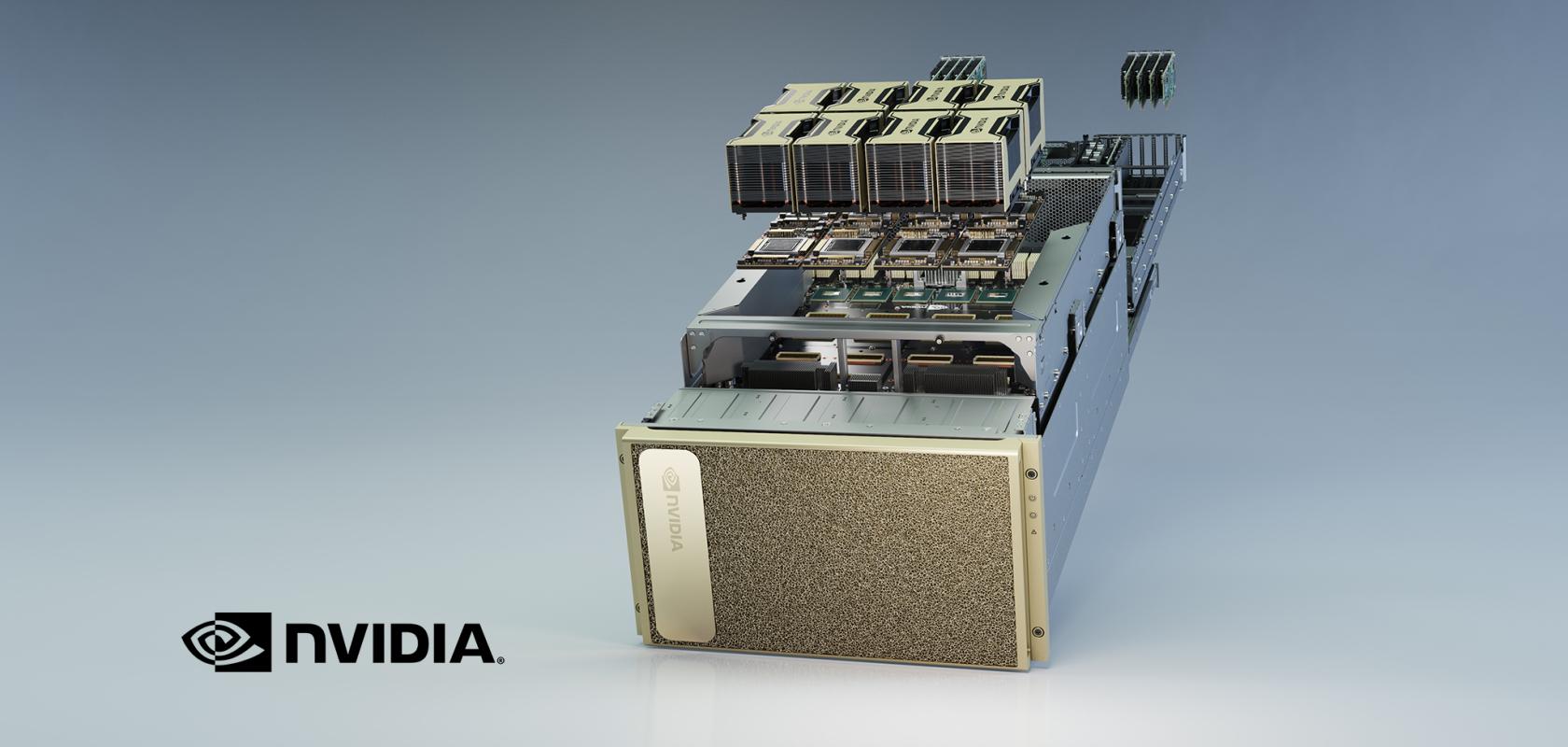

Full Speed Ahead with DGX A100

Applications built with Jarvis can take advantage of innovations in the new Nvidia A100 Tensor Core GPU for AI computing and the latest optimisations in Nvidia TensorRT for inference. For the first time, it’s possible to run an entire multimodal application, using the most powerful vision and speech models, faster than the 300-millisecond threshold for real-time interactions.
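The 300-millisecond figure is easiest to read as a budget shared by every stage of a multimodal pipeline. The following is a minimal sketch, assuming the stages run strictly one after another, that sums per-stage latencies and reports the headroom left under the threshold. The `Stage` class, the `budget_report` function and the stage timings in the example are hypothetical placeholders, not Jarvis APIs or measured Nvidia results.

```python
from dataclasses import dataclass
from typing import Sequence, Tuple

# Real-time threshold cited in the article, in milliseconds.
REAL_TIME_BUDGET_MS = 300.0


@dataclass(frozen=True)
class Stage:
    """One step of a conversational AI pipeline and its latency (hypothetical)."""
    name: str
    latency_ms: float


def budget_report(
    stages: Sequence[Stage], budget_ms: float = REAL_TIME_BUDGET_MS
) -> Tuple[float, float, bool]:
    """Return (total latency, headroom, is_real_time) for a sequential pipeline.

    Assumes stages run one after another, so end-to-end latency is the sum
    of per-stage latencies. Headroom is the budget minus that sum.
    """
    if any(stage.latency_ms < 0 for stage in stages):
        raise ValueError("stage latencies must be non-negative")
    total_ms = sum(stage.latency_ms for stage in stages)
    headroom_ms = budget_ms - total_ms
    # "Faster than the threshold" is taken to mean strictly under the budget.
    return total_ms, headroom_ms, total_ms < budget_ms


if __name__ == "__main__":
    # Placeholder timings for illustration only, not measured results.
    pipeline = [
        Stage("speech recognition", 120.0),
        Stage("language understanding", 40.0),
        Stage("speech synthesis", 90.0),
    ]
    total, headroom, real_time = budget_report(pipeline)
    print(f"total={total} ms, headroom={headroom} ms, real-time={real_time}")
```

A plain sum is the conservative choice: production systems often stream partial results between stages so that they overlap, in which case the true end-to-end latency can be lower than this estimate.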

Nvidia DGX A100 is the universal system for all AI workloads, offering unprecedented compute density, performance and flexibility in the world’s first 5 petaFLOPS AI system. It features the world’s most advanced accelerator, the Nvidia A100 Tensor Core GPU, enabling enterprises to consolidate training, inference and analytics into a unified, easy-to-deploy AI infrastructure that includes direct access to Nvidia AI experts.